Are You Really Pushing the Limits of Power BI?

Power BI's Vertipaq engine can handle A LOT of data. There are some causes of slow performance that can be easily fixed.

Your Power BI reports are crawling. Page loads feel like an eternity. You're told your data is "too big" and you need an expensive upgrade.

Slow reports are a common growing pain. As your business succeeds, your data scales with it. But here's the truth: Power BI can handle far more than most people realize. The problem usually isn't the platform.

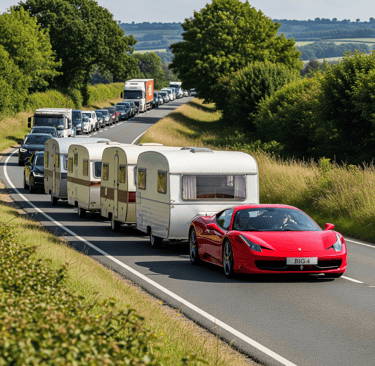

It reminds me of an old music video. William Shatner sees Brad Paisley parking his Ferrari, and runs over to chew him out. Brad gets out, a little sheepish after borrowing his friend’s car, and says “2nd gear sticks a little bit.” William Shatner looks at the car, then at Brad, “You got it into 2nd gear? … How’d you do that?”

The Problem: "Outgrowing" Power BI

I worked with a client in the health sector facing a Power BI "crisis." They hired one of the Big 4 accounting firms to develop a Power BI dashboard, which was buckling under 10GB of data:

Reports took 10-20 seconds to load.

Key metrics that conflicted and changed erratically when filtered.

Manual data refreshes that cost 5 figures every month.

They were told Power BI would soon break entirely. I was with a top BI consulting firm at the time, and we were deeply skeptical about the need for a whole new system. We’ve seen Power BI handle 50-100 million rows of data with just a bit of optimizing, and this client had a small fraction of that.

The client asked us to look under the hood. It turns out the real issue wasn’t the car, it was the mechanic.

Data Modeling Done Wrong

The first thing we noticed was one enormous table. Power BI works best with a Star Schema, breaking up data into manageable chunks to avoid repetition and bloat. The Big 4 developers chose an antiquated technique, the equivalent of piling everything they own into an overstuffed camper.

The second issue was not using Power BI's built-in time intelligence functions—DAX formulas that calculate Year-To-Date (YTD) subtotals, Prior Year (PY) comparisons, and other time-based metrics on the fly. Instead, the Big 4 developers tried to cover every scenario by creating more data and adding it to the model. They duplicated every data point dozens, sometimes hundreds of times to pre-calculate “lookbacks” for basic YTD and PY functions.

Convoluted DAX

One of the key metrics revealed another issue. Multiple developers had clearly worked on the same measure without coordination. They created four measures and calculated columns referring to each other, creating nested CALCULATE() statements many layers deep, with filters that cut each other off like NASCAR drivers. One measure added something to the total, while another subtracted it, all adding to load time. The metrics displayed seemingly erratic results whenever the user changed the filters. It took some time just to figure out what each metric actually included (and confirm that Power BI does indeed do exactly what you tell it to).

Part of this was poor coordination, but part of it was poor modeling, which inevitably makes the DAX more difficult.

Manual Refreshes

The most alarming problem was the monthly refreshes, which got so complicated it needed manual intervention from IT and Accounting. Since everything was hosted on the Big 4 developer’s own platform, it cost tens of thousands in consulting fees every month. Without going into detail, it included:

A 3,000 line SQL query

17 poorly documented python scripts (must be run manually in a specific order)

Manual updates to various tables

When we broke down the SQL and python scripts, we found the same do-undo-redo as in the DAX code. A lot of that complexity was simply unnecessary.

The most egregious finding was the Calendar / Date table, which I normally generate in Power BI to automatically suit the data. The Big 4 developers chose to hard-code the date range, always stopping on the last date in the data (even mid-month / quarter / year). This alone guaranteed the data model needed human intervention for every refresh. That’s like a mechanic putting only 200 miles on your oil change sticker to keep you coming back early and often.

Shifting Gears

It took three days to reverse-engineer and fix the maze of mistakes in the data model, a few more days to review and rework the DAX, and a second engagement to build a fully automated data pipeline. Some of the things I did include:

Broke the single large table into proper fact and dimension tables

Removed duplicate data

Added a proper Calendar / Date table to enable DAX’s native time-intelligence functionality

Cleaned up elaborate, unnecessary DAX code

Analyzed output of the data pipeline (the Big 4 developers kept the code proprietary), and rebuilt a fully automated version in the client’s own Power BI environment.

(Each of these warrant their own post… I’ll come back to link them when they are written.)

Over a few short weeks, we tallied some quick wins:

Reduced 10GB of raw data to a 3MB Power BI file.

Every report page loaded in under a second or two.

• Eliminated the need for manual intervention, enabling daily (or even hourly) refreshes.

Reimagined the filters and visuals to be much more intuitive and user friendly.

The finance team got faster, more reliable reports. The company saved tens of thousands per month. And Power BI? The Vertipaq engine is purring on cruise control.

Ready for a Power BI tune up?

Are you hitting the redline with your Power BI reports? Before you write off Power BI as "not enterprise-ready" or throw money at a more complex solution, let's talk.

I build custom Power BI solutions at the speed needed to keep projects on time and on budget. Contact me today to see how you can upshift your data.

Imagine a Ferrari driving down the highway, towing half a dozen camper trailers, holding up traffic. It’s comically ridiculous, but that's essentially what the Big 4 developers did with their data.